Roboethics – the big debate

Oct 16, 2019

Isaac Asimov's Three Laws of Robotics:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law

Today, in the ongoing debate on machine ethics, Asimov's Laws create more problems and conflicts for roboticists than they solve. Who or what is going to be held responsible if an autonomous system malfunctions or harms humans?

Ethics and roboethics

Ethics is the branch of philosophy that studies human conduct, moral assessments, and the concepts of good and evil, right and wrong, justice and injustice.

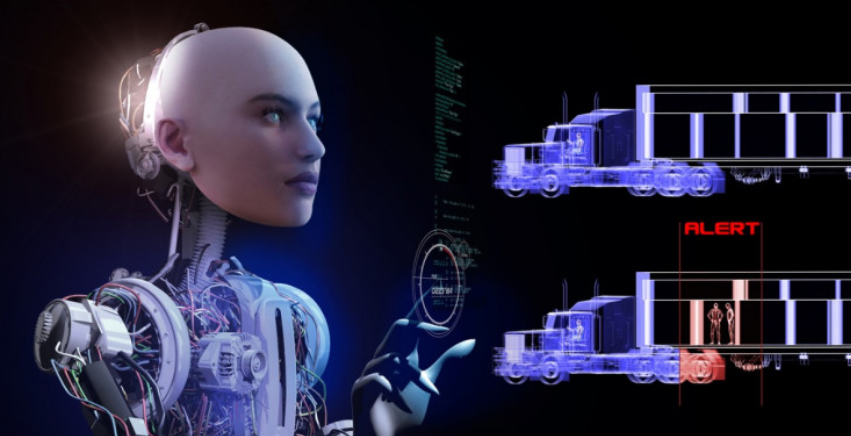

Roboethics, also called machine ethics, deals with the code of conduct that robot design engineers must implement in the Artificial Intelligence of a robot. Roboticists must guarantee that autonomous systems can exhibit ethically acceptable behaviour whenever robots or other autonomous systems interact with humans.

Ethical issues continue to grow because there are two distinct sets of robotic applications: service robots, which are created to live and interact peacefully with humans, and lethal robots, which are created to fight on the battlefield as military robots.

Military robots

Military robots are certainly not just a thing of the present. They date back to World War II and the Cold War. Today, military robots are being developed to fire guns, disarm bombs, carry wounded soldiers, detect mines, fire missiles, fly, and so on.

However, what kind of roboethics is going to be embedded in military robots, and who is going to decide on it? Asimov's laws cannot be applied to robots that are actually designed to kill humans. The debate continues…

Robots a greater risk than nuclear weapons

Tech billionaire Elon Musk co-founded OpenAI in 2015 with the goal of developing AGI that can learn and master several disciplines. Its systems soon made the world pay attention by beating the world’s best human players at the video game Dota 2.

However, Mr Musk has since stepped back from the AI startup due to concerns about the risks artificial intelligence poses to humanity. He claims its development poses a greater risk than nuclear weapons.

He has also issued warnings about Artificial Intelligence “bot swarms”, which he says could be the first signs of a robot takeover.

Swarm robotics theory is inspired by the behaviour of social insects such as ants, whose members communicate with one another to build a system of constant feedback. Swarm behaviour involves constant change in individuals as they cooperate with others, as well as in the behaviour of the whole group.

Reaping benefits whilst avoiding pitfalls

Microsoft invested $1 billion in OpenAI, and its president, Brad Smith, has separate concerns over the rise of ‘killer robots,’ which he feels has become unstoppable.

He said the use of “lethal autonomous weapon systems” poses a host of new ethical questions, which governments need to consider as a matter of urgency. “Robots must not be allowed to decide on their own to engage in combat and who to kill.”

OpenAI CTO Greg Brockman sought to allay fears, saying, “We want AGI to work with people to solve problems, including global challenges such as climate change, affordable and high-quality healthcare, and personalised education. AI has such huge potential, it is vital to research how to reap its benefits while avoiding potential pitfalls.”

Are robots capable of moral decision making?

Roboethics must become increasingly important as we enter an era in which more advanced and sophisticated robots, as well as Artificial General Intelligence (AGI), are becoming an integral part of our daily lives.

Some believe that robots will contribute to building a better world. Others argue that robots are incapable of being moral agents and should not be designed with embedded moral decision-making capabilities. What do you think?

(Extracts taken from “Roboethics: The Human Ethics Applied to Robots”, Interesting Engineering, Sept 22nd 2019 & “Elon Musk’s AI Project to replicate the Human Brain receives $1 Billion from Microsoft”, The Independent, 23 July 2019)

Plunkett Associates thoughts…

Engineers work to solve a problem, and the task will eventually be solved irrespective of the philosophical discussions that may be revolving around it.

We cannot uninvent things. The human mind, let alone AI, works to solve challenges. It's called human nature or “progress”.

I don't believe this natural trait can (or should) be stopped, but I also accept that those at the coalface are probably not the best-placed people to assess the ethical consequences. So who should?